Zhipu CogVideoX: An Open-Source Video Generation Model


I. Resources

GitHub - THUDM/CogVideo: Text-to-video generation: CogVideoX (2024) and CogVideo (ICLR 2023)

CogVideo/README_zh.md at main · THUDM/CogVideo · GitHub

II. Deploying and Running CogVideo

Method 1: Deploy from Source

Step 1: Download the source code

cd /workspace/

git clone https://github.com/THUDM/CogVideo.git

Step 2: Install dependencies

cd /workspace/CogVideo/

pip install -r requirements.txt

cd sat

pip install -r requirements.txt

pip install omegaconf

Step 3: Download the model weights

mkdir THUDM

cd THUDM

git lfs install

git clone https://www.modelscope.cn/ZhipuAI/CogVideoX-5b-I2V.git

Step 4: Test
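Before running the demo, it can help to confirm that the clone from step 3 finished and the model directory is complete. The following is a minimal sketch, not part of the CogVideo repository. The helper name `missing_model_parts` is hypothetical, the sub-folder names are taken from the `subfolder=` arguments used in Method 3 of this article, and the presence of `model_index.json` assumes the repository uses the usual diffusers layout.

```python
from pathlib import Path

# Sub-folders that the loading code in this article reads via `subfolder=...`.
EXPECTED_SUBFOLDERS = ("text_encoder", "transformer", "vae")


def missing_model_parts(model_dir):
    """Return the names of expected entries that are absent from model_dir."""
    root = Path(model_dir)
    missing = [name for name in EXPECTED_SUBFOLDERS if not (root / name).is_dir()]
    # Assumption: the repo ships the pipeline manifest used by diffusers.
    if not (root / "model_index.json").is_file():
        missing.append("model_index.json")
    return missing


if __name__ == "__main__":
    # Path assumed from the commands in this section; adjust if you cloned elsewhere.
    model_dir = "/workspace/CogVideo/sat/THUDM/CogVideoX-5b-I2V"
    problems = missing_model_parts(model_dir)
    print("model directory looks complete" if not problems else f"missing: {problems}")
```

An empty result only means the expected entries exist. It does not verify that the large weight files were fully fetched by git lfs.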

cd /workspace/CogVideo/inference

python cli_demo.py

Method 2: Deploy with Docker

Step 1: Install Docker

apt install podman-docker

apt install docker.io

Step 2: Start Docker

systemctl start docker

systemctl enable docker

Step 3: Pull and run the image

docker run -itd --name=cogvideo -p 7878:7878 --gpus=all registry.cn-hangzhou.aliyuncs.com/guoshiyin/cogvideo:v3
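Once the container is started, a quick way to see whether anything is listening on the published port is a plain TCP connection test. This is a minimal sketch using only the standard library. The helper name `port_is_open` is hypothetical, and the article does not say which service the image exposes on port 7878, so the check only confirms that the port accepts connections.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 7878 is the host port published by `-p 7878:7878` in the command above.
    print("port 7878 reachable:", port_is_open("127.0.0.1", 7878))
```

The container may need some time to load the model after it starts, so a `False` result right after `docker run` is not necessarily an error.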

Method 3: Call the Model via ModelScope

Step 1: Install dependencies

pip install modelscope

pip install torch

pip install accelerate

pip install sentencepiece

pip install --upgrade opencv-python transformers

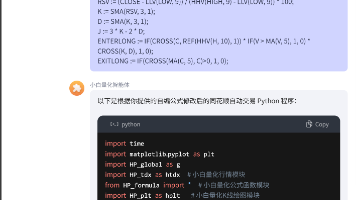

pip install git+https://github.com/huggingface/diffusers.git@878f609aa5ce4a78fea0f048726889debde1d7e8#egg=diffusers # Still in PR

Step 2: Write the code

mkdir -p /workspace/

cd /workspace/

touch cli.py

vi cli.py

Add the following code to cli.py:

# To get started, PytorchAO needs to be installed from the GitHub source and PyTorch Nightly.

# Source and nightly installation is only required until the next release.

import torch

from diffusers import AutoencoderKLCogVideoX, CogVideoXTransformer3DModel, CogVideoXImageToVideoPipeline

from diffusers.utils import export_to_video, load_image

from transformers import T5EncoderModel

from torchao.quantization import quantize_, int8_weight_only

quantization = int8_weight_only

text_encoder = T5EncoderModel.from_pretrained("THUDM/CogVideoX-5b-I2V", subfolder="text_encoder", torch_dtype=torch.bfloat16)

quantize_(text_encoder, quantization())

transformer = CogVideoXTransformer3DModel.from_pretrained("THUDM/CogVideoX-5b-I2V",subfolder="transformer", torch_dtype=torch.bfloat16)

quantize_(transformer, quantization())

vae = AutoencoderKLCogVideoX.from_pretrained("THUDM/CogVideoX-5b-I2V", subfolder="vae", torch_dtype=torch.bfloat16)

quantize_(vae, quantization())

# Create pipeline and run inference

pipe = CogVideoXImageToVideoPipeline.from_pretrained(
    "THUDM/CogVideoX-5b-I2V",
    text_encoder=text_encoder,
    transformer=transformer,
    vae=vae,
    torch_dtype=torch.bfloat16,
)

pipe.enable_model_cpu_offload()

pipe.vae.enable_tiling()

pipe.vae.enable_slicing()

prompt = "A little girl is riding a bicycle at high speed. Focused, detailed, realistic."

image = load_image(image="input.jpg")

video = pipe(
    prompt=prompt,
    image=image,
    num_videos_per_prompt=1,
    num_inference_steps=50,
    num_frames=49,
    guidance_scale=6,
    generator=torch.Generator(device="cuda").manual_seed(42),
).frames[0]

export_to_video(video, "output.mp4", fps=8)

Step 3: Run and debug
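Before launching the script, it can save time to confirm that the packages it imports are actually available in the current environment. The following is a minimal sketch using only the standard library. The helper name `find_missing` is hypothetical, and the module list is an assumption derived from the install commands in step 1 plus `torchao`, which cli.py imports but step 1 does not install.

```python
import importlib.util

# Import names for the packages from step 1, plus torchao, which cli.py imports.
# Note that the opencv-python package is imported as `cv2`.
REQUIRED_MODULES = (
    "torch", "diffusers", "transformers", "accelerate",
    "sentencepiece", "cv2", "modelscope", "torchao",
)


def find_missing(modules):
    """Return the module names from `modules` that cannot be located."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    print("all imports available" if not missing else f"missing: {missing}")
```

The check only looks for the modules and does not import them, so it runs quickly and does not need a GPU.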

cd /workspace/

python cli.py
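The script refers to the weights by the id `THUDM/CogVideoX-5b-I2V`, which `from_pretrained` normally resolves against the Hugging Face Hub. If the weights should come from ModelScope instead, one option is to download them first and pass the local directory to `from_pretrained`. The following is a minimal sketch under that assumption. The helper `resolve_model_dir` and its `downloader` parameter are hypothetical, while `snapshot_download` is the download function provided by the `modelscope` package, and the model id is the one from the ModelScope URL in Method 1.

```python
def resolve_model_dir(model_id="ZhipuAI/CogVideoX-5b-I2V", downloader=None):
    """Download the model (or reuse a cached copy) and return its local path.

    `downloader` is a hypothetical hook so the helper can be exercised without
    network access; by default modelscope's snapshot_download is used.
    """
    if downloader is None:
        # Imported lazily so this module loads even if modelscope is absent.
        from modelscope import snapshot_download
        downloader = snapshot_download
    return str(downloader(model_id))
```

Calling `resolve_model_dir()` returns a directory path. In cli.py, that path can be passed to each `from_pretrained(...)` call in place of `"THUDM/CogVideoX-5b-I2V"`, keeping the `subfolder=` arguments unchanged.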

III. Video Creation Platforms

1. Qingying (清影), by Zhipu

Try it at: Zhipu Qingyan (智谱清言)

Prompts

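A panda wearing a small red jacket and a tiny hat sits on a wooden stool in a serene bamboo forest. Its fluffy paws strum a miniature acoustic guitar, producing a soft melody. Nearby, several other pandas gather and watch curiously, and some clap along in rhythm. Sunlight filters through the tall bamboo and casts a gentle glow over the scene. The panda's face is expressive, showing concentration and joy as it plays. The background includes a small stream and vibrant green foliage, adding to the peaceful, magical atmosphere of this unique musical performance.

Realistic style, close-up: a cheetah lies asleep on the ground, its body gently rising and falling.

Low-angle shot pushing upward, slowly tilting up: a dragon suddenly appears on an iceberg, notices you, and charges toward you. Hollywood movie style.

A small white rabbit wearing black-framed glasses types on a keyboard like a human, with a serious, focused expression. A plate of mooncakes sits on the desk. Side-profile shot; the background is a window at night with a large moon.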

Other video generation platforms

2. Jimeng (即梦), by ByteDance

3. Kling (可灵), by Kuaishou

4. PixVerse, by AIsphere (爱诗科技)

PixVerse - Create breath-taking videos with PixVerse AI

5. Xunguang (寻光), by Alibaba

